Peter Sloan, Department of Physics

In this case study (from accounts first published on his blog), Peter Sloan discusses the implementation of randomised coursework, followed by an evaluation over two years of its effect on exam performance and of additional questions such as bias in the outcomes.

The Coursework

First published on Peter’s personal Blog:

I have taught half of the 2nd year course "Quantum and Atomic Physics" (PH2013/60 if you care) for three years. My section, 5 weeks long with 15 one-hour teaching sessions, takes the students from solving the Schrödinger equation for a hydrogen atom, through spin-orbit interactions, all the way to term symbols for multi-electron atoms. What I have felt is missing, and what I have failed to find time for, is a demonstration that the large and unwieldy 3D operators really do work and really do return the energy, or the angular momentum, or whatever it may be. Therefore I have designed a new piece of coursework for this coming year: a question sheet with answers to be handed in and marked.

Why is this worthy of a blog post? Well, for the class of 160 2nd year students the point is to get each one to sit down and do the questions. None will be too hard, though many will involve fairly tortuous mathematics. So the aim is not to get marks, but to try the problems. Since this is hand-in coursework there may be a temptation to simply regurgitate a friend's answers. Hence I have introduced a random element into the mix.

The sheet consists of three main questions, each with an array of sub-questions. For each student, I wanted each main question to be randomly assigned a wave-function (i.e., a starting point) out of a catalogue of 8 wave-functions. No two (within reason) problem sheets will be the same. Students will therefore find it hard to copy from friends, but will be able to apply the methods to their own version of the problem sheet. It will also mean they will be exposed to three different wave-functions and get a feel for what they are.
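The random assignment can be sketched in a few lines. This is a minimal Python illustration, not the Matlab code actually used: the catalogue labels are hypothetical placeholders, and drawing without replacement (so that the three questions get three different wave-functions) is an assumption based on the description above.

```python
import random

# Hypothetical labels: the post says there are 8 wave-functions but does not list them.
CATALOGUE = ["A", "B", "C", "D", "E", "F", "G", "H"]


def assign_wavefunctions(exam_code: str, n_questions: int = 3) -> list:
    """Pick one starting wave-function per main question for one student."""
    # Seeding with the exam code makes each student's sheet reproducible,
    # so the same draw can be repeated later when generating solutions.
    rng = random.Random(exam_code)
    # sample() draws without replacement, so the three questions differ.
    return rng.sample(CATALOGUE, n_questions)
```

Seeding on the exam code is a design choice of this sketch: it means the question sheet and the matching solution sheet can be generated in separate runs without storing the draw.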

But we can do more. Using some cunning Matlab and LaTeX coding, each question sheet will have printed on it the unique exam code each student has. I have no access to the database that holds the key for translating code into name. This coursework will therefore be as anonymous as the exams are, removing any potential subconscious bias. This is especially important as, after this year's trial, I hope to make this coursework count towards the mark for this course.

The practicalities are that I cannot hand out individual sheets; it would take too much time. Instead the Matlab/LaTeX code generates a pdf for each student comprising the question sheet and a cover sheet. This will be uploaded to our online teaching forum for this course. The cover sheet has a marking grid and also the student's exam number. They can simply staple this to the handwritten answers. At the click of another button I will be able to generate bespoke solution sheets for each student, again uploaded to the online teaching resource.
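A minimal sketch of how such a per-student document might be produced, written in Python rather than Matlab. The LaTeX skeleton, the function name `build_sheet` and the question wording are all hypothetical; the real cover sheet layout is not given here.

```python
from string import Template

# Hypothetical LaTeX skeleton: a cover sheet with exam number and marking
# grid, followed by the question sheet.
SHEET = Template(r"""\documentclass{article}
\begin{document}
\section*{Cover sheet}
Exam number: $exam_code

\begin{tabular}{|l|c|}
\hline
Question & Mark \\ \hline
$grid_rows
\end{tabular}
\newpage
\section*{Question sheet}
\begin{enumerate}
$question_items
\end{enumerate}
\end{document}
""")


def build_sheet(exam_code, wavefunctions):
    """Return LaTeX source for one student's cover sheet and questions."""
    # One empty marking-grid row per main question.
    grid_rows = "\n".join(
        rf"{q} & \\ \hline" for q in range(1, len(wavefunctions) + 1)
    )
    # Placeholder question text; the real sub-questions are not reproduced.
    question_items = "\n".join(
        rf"\item Starting from wave-function {w}, answer the sub-questions."
        for w in wavefunctions
    )
    return SHEET.substitute(
        exam_code=exam_code, grid_rows=grid_rows, question_items=question_items
    )
```

The returned string would be written to one `.tex` file per exam number and compiled to pdf with a LaTeX engine. A solutions sheet could be built the same way from a second template.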

One downside is that there are 13 questions and 8 possible starting points, so I require 104 individual solutions. That is what PG demonstrators are good for! And I will have a bit of marking to do.

What effect did my new coursework have on exam outcome?

First published on Peter’s blog:

Abstract:

Those who handed in the coursework had a higher mark in my bit of the exam (76%) than those who did not do the coursework (57%). This uplift was true for all students no matter what their overall grade for the year was. The students who benefited the most were those who had an overall year mark in the range 40 - 70 %. If we assume that exam mark is related to understanding (which I try to test in my exam questions) then the coursework succeeded in its purpose.

Let’s start with a quote or four:

“The unmarked coursework section was strangely enjoyable…”

“When I got n=3, I did a little dance!”

“The coursework was a fantastic idea …as a mathematician it gave me a concept boost…”

“I can now differentiate anything”

Last summer I decided to jazz up my 2nd year Atomic Physics course. This is taken by Physics, Maths and Physics, Natural Scientists and a few others, in total about 160 students. The coursework involved extensive calculation and mathematics (which there is no time to test in an exam). Here I want to explore and present some measurable outcomes of this formative assessment.

Did anyone do it?

Out of a total of 154 students, 85 did the coursework, so just over 55% submitted. Considering this is not a summative assessment (it does not count towards the final mark) I was quite surprised at the high submission rate. In the week preceding the coursework deadline, the Department work-spaces were full of students frantically working their way through lengthy (but relatively straightforward) mathematics. I should also admit that I had told the students that there would be one exam question nearly identical to one of the questions in the coursework sheet.

After the submission deadline, I surveyed the students asking why they did or didn't do the coursework. Out of the 69 who didn't do it, 7 responded to the survey, all citing that they were busy with other things that did count (e.g., placement preparation, lab reports) and saying that they would use the coursework as exam revision when they had the solutions to guide them.

What were the raw results?

Like all coursework one can get a bit carried away with getting all the marks. This may have been the case here, with an average of 72 % and a spread (std) of 21 % - see graph.

We also see that many who engaged with the work scored high marks, which must have taken quite some time and tenacity. One student even managed to get Maple to do most of the work for them.

How does this compare with the exam mark?

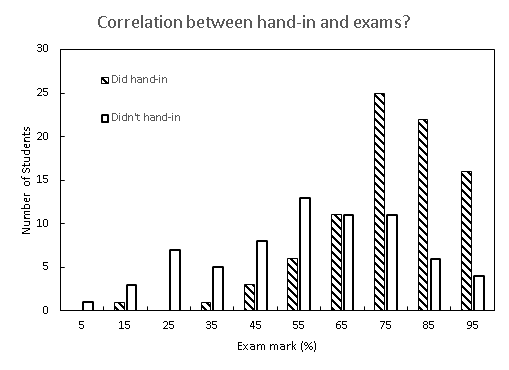

Here I examine only the portion of the relevant exam that I set (32 marks out of a 60 mark exam). The following graph shows the distribution of exam marks for those who did the coursework and those who didn’t.

A fairly stark difference. Those that did the coursework had a mean mark of 76 ± 15 % and those that didn't had a mean exam mark of 57 ± 22 %. Both good, but significantly higher for those that did the coursework.
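As a rough check on "significantly higher", the two means can be compared using Welch's t statistic computed from the summary numbers alone. This sketch is not part of the original analysis. It assumes that the ± values are standard deviations and that all 85 submitters and all 69 non-submitters sat the exam.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic for two groups with unequal variances."""
    # Standard error of the difference between the two group means.
    se = math.sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    return (mean_a - mean_b) / se, se


# Summary figures quoted above; group sizes are assumed (see lead-in).
t, se = welch_t(76, 15, 85, 57, 22, 69)
print(f"difference = 19 %, standard error = {se:.1f} %, t = {t:.1f}")
```

Under those assumptions the standard error of the difference is about 3 % and the statistic comes out at about 6, which is consistent with calling the 19 % gap significant.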

Why the exam difference?

To work out what’s going on with an experiment I usually run all sorts of tests and background checks, but with this student data I’m a bit limited.

(1) Was it all down to the question from the coursework that appeared in the exam?

The question was worth 7 marks out of the 32 marks for the exam. Those that did the coursework got 1.5 marks more for this question than those that didn’t. Not enough to explain the overall difference in the exam. In fact, those that did the coursework scored consistently higher on all the exam questions.

(2) Were the students who handed-in just overall the better students?

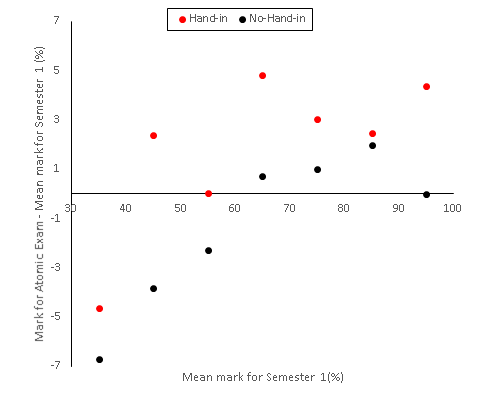

It may be that the set of students who bothered with the coursework were in effect self-selecting and only the strong students submitted. To get a decent picture of what’s what, I have looked at the difference between the mark each student got in my exam and that student’s overall exam average for semester 1: in effect, whether they did better in my exam than they do on average. This is done both for the cohort who did the coursework and for those who did not, and plotted as a function of the overall mean mark for semester 1.

So we see that the best students with high mean marks had a 4% boost in my exam if they did the coursework, and were on their average if they didn’t. Overall we see a boost at all levels for those who did the coursework. This is especially true in the 40-70 % range. So it seems that the coursework really helped the “weaker” students, but all students benefited from doing it.

What did the students think?

Out of the 85 who submitted the coursework 32 responded to a survey.

- They overwhelmingly thought it was a useful exercise.

- They agreed that it was about the right length.

- They were mixed as to whether it should change from formative to summative.

The official end of unit survey gathered four text responses, which mostly suggested the coursework was a bit long and clashed with other lab reports etc. I should note that the teaching scores I received for this course were the highest for any physics course (all years) run during semester 1 of the 2014/2015 academic year.

What’s next?

For next year I’ll run the whole exercise again and again it’ll be formative. But I will shorten the activity by rearranging the wavefunctions (the starting points) for the questions. If I show the exam-marks graphs, perhaps a few more will be inspired to do the work, but then that’ll skew my data for next year’s analysis! Oh well back to paper writing now.

Update:

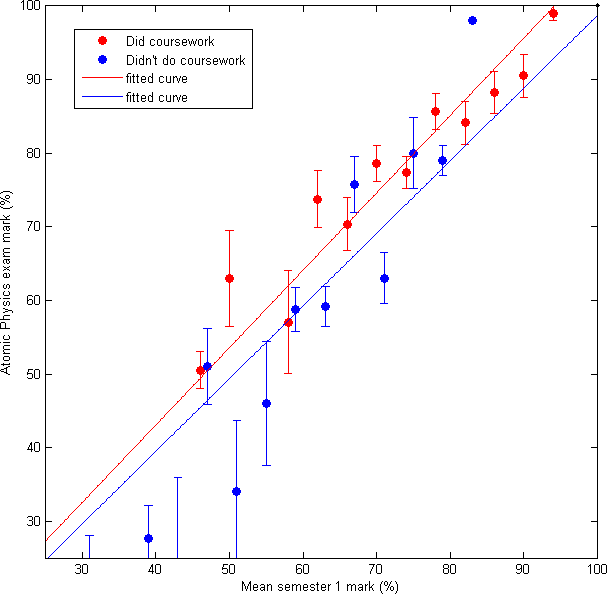

@andydolman suggested a nicer way of exploring the results. So here are the Atomic exam marks against mean semester 1 marks. The results are binned into 4 % wide bins of semester 1 mark to get a mean and std of the mean for each bin.

Again we see that the students with lower overall semester 1 marks are aided by the coursework, but that many of them didn't attempt the coursework at all.
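The binning described above can be sketched as follows. This is an illustrative Python version, not the code used for the graph: the function name and the `(semester_mean, exam_mark)` record layout are assumptions, and "std of the mean" is taken to be the standard error, i.e. the sample standard deviation divided by the square root of the bin count.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def bin_marks(records, width=4.0):
    """Group (semester_mean, exam_mark) pairs into bins of semester mean.

    Returns {bin_lower_edge: (mean_exam_mark, standard_error, count)}.
    """
    bins = defaultdict(list)
    for sem, exam in records:
        # Lower edge of the bin this semester mean falls into.
        bins[math.floor(sem / width) * width].append(exam)
    out = {}
    for lower, marks in sorted(bins.items()):
        # The standard error is undefined for a single student in a bin.
        if len(marks) > 1:
            err = stdev(marks) / math.sqrt(len(marks))
        else:
            err = float("nan")
        out[lower] = (mean(marks), err, len(marks))
    return out
```

Running this once on the students who handed in and once on those who did not gives the two series to plot against each other.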

Year 2 analysis

What is it?

A second year course in Quantum and Atomic Physics, run in the first semester of the academic year with just under 200 students: mostly physics students, but some Natural Scientists, some Maths and Physics and some straight maths students. The course is challenging and mostly all new physics. How to get the students to play with the mathematics core to the course, which is not really suitable for an exam question? Answer: coursework. The questions are designed to aid a deeper understanding of the physics. The coursework is formative, that is, it does not count towards the final unit mark. I get about half the students submitting their work. The feedback I get about the coursework is positive.

Did it have an effect?

What to measure? I can measure the mark the students got for my bit of the Quantum and Atomic Exam and the overall average marks the students got for the semester, both for those that did the coursework and those that did not.

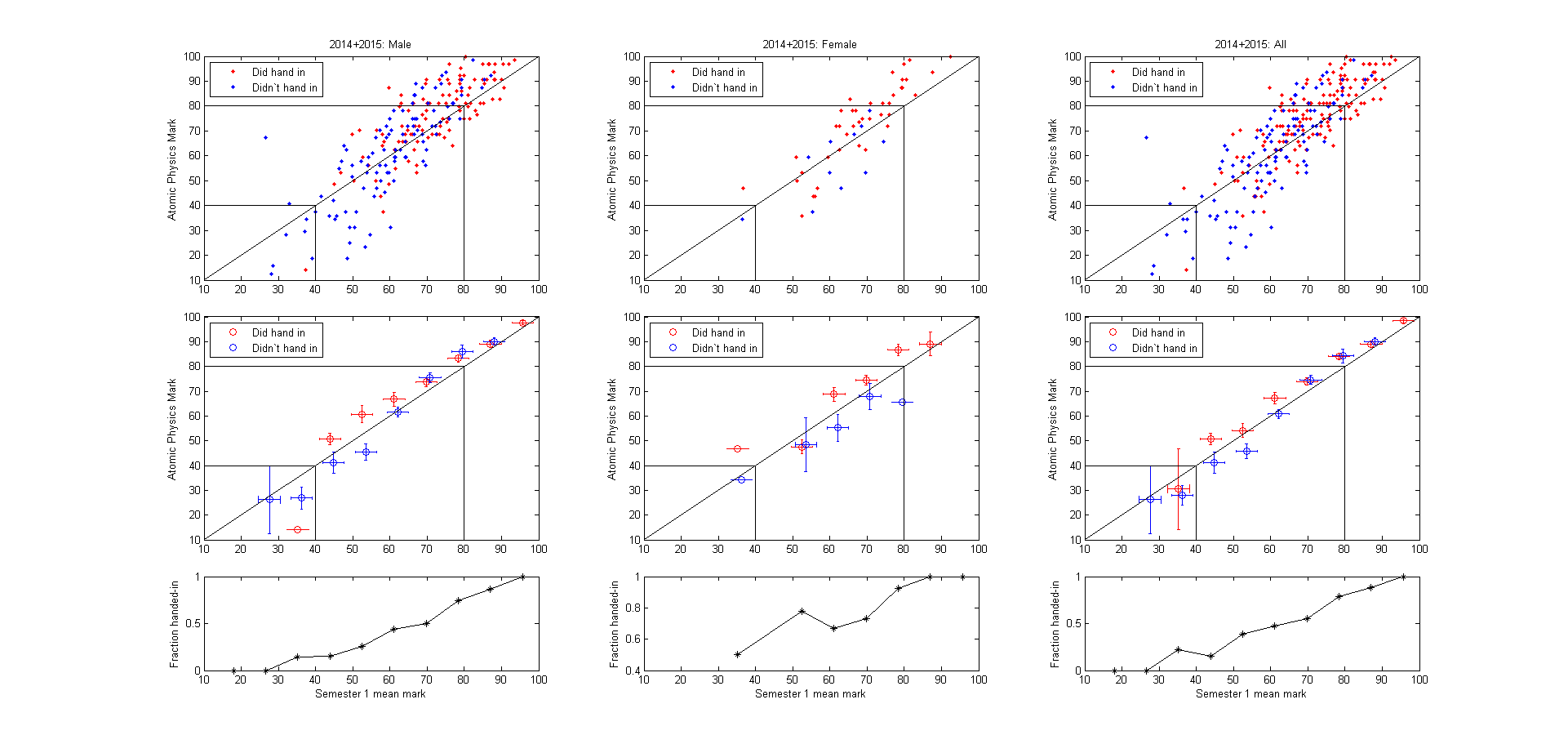

These charts show two years' worth of data.

- The likelihood of handing in the coursework rises monotonically with a student's overall average mark for the semester; students below an average of 40 % didn't do the coursework at all (bar 2 students).

- For students with an average mark above ~ 80 % the coursework makes little difference.

- Between 40% and 80% there is a reasonably consistent trend that those who did the coursework gain a higher atomic exam mark than equivalent students (same average semester mark) who did not. The mean difference between hand-in/no-hand-in is (8 ± 2) %. Quite a bump up the mark scale.
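How the (8 ± 2) % figure was obtained is not stated. One plausible way to get a number of this kind is to difference the two groups bin by bin and average, as in the sketch below. It assumes each group has already been reduced to a mean and standard error per semester-mark bin, and it weights every shared bin equally; both choices are assumptions of this illustration.

```python
import math


def mean_difference(bins_did, bins_did_not):
    """Average hand-in minus no-hand-in difference over shared bins.

    Each argument maps a bin label to (mean_exam_mark, standard_error).
    Returns (mean_difference, standard_error_of_that_mean).
    """
    shared = sorted(set(bins_did) & set(bins_did_not))
    if not shared:
        raise ValueError("the two groups have no bins in common")
    # Per-bin difference in mean exam mark.
    diffs = [bins_did[b][0] - bins_did_not[b][0] for b in shared]
    # Per-bin errors add in quadrature.
    errs = [math.hypot(bins_did[b][1], bins_did_not[b][1]) for b in shared]
    n = len(shared)
    avg = sum(diffs) / n
    # Error on an unweighted mean of independent differences.
    err = math.sqrt(sum(e**2 for e in errs)) / n
    return avg, err
```

Restricting the input to the bins between 40 % and 80 % would match the range quoted in the last bullet.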

Perhaps if we could analyse the various problem sheets in the same way, we could see the bit-by-bit gain in marks that comes from engaging with the course and its problems.

Conclusion

The coursework is effective (to a degree) in providing a deeper understanding of the underlying physics.*

------------

Many thanks to Kristina Rusimova for organizing the data.

*Assuming that is what the exam tests**.

** Another story.

Is my 2nd year coursework sexist?

As part of a drive to remove the gender imbalance in the sciences, the Department of Physics at Bath is involved with the AthenaSWAN program. One item of note from the analysis of the degree outcomes (sorry I can't find a link for that) is a slight (and perhaps statistically insignificant) imbalance in degree outcome by gender. So the question I wish to address here is: was the coursework I set sexist? It is heavily based on the maths of quantum physics, and was so abstract as to have, to my mind, no gender implications.

The data:

Here are three sets of graphs for the combined 2014 + 2015 cohort, for males, females and all students.

What we see:

- Our women students are more likely to submit the coursework.

- The exam mark boost attained by completing the coursework was, to a large degree, consistent for women and for men.

Conclusion: The coursework is indeed gender neutral.