Last month I went to the Institutional Web Management Workshop (that’s IWMW for those of us who enjoy sounding like we’re speaking in tongues). This involved lectures, workshops and masterclasses by a great bunch of speakers from web-related roles in UK higher education. I took away a lot from the three-day conference, so I'm going to share some of it with you lucky guys. Here's what I learnt about how the University of Birmingham is carrying out user testing.

User testing: we all know it's important and we all know we need to do more of it. But too often it gets bypassed because of the time, resources and people we think it should involve. I’ve had some experience on both sides of the user testing coin at Bath, so I was interested to hear what another university was doing (and whether I could shamelessly steal their methods). The workshop, run by Albert Gugliemi, web editor at Birmingham, gave a rundown of the uni's early forays into user testing and some best-practice tips.

5 is the magic number

First things first: to get good results from user testing, you need to test as many people as possible. The more the merrier, right? Wrong! At Birmingham, they test 5 people at a time and no more. According to an article from the Nielsen Norman Group, testing small groups can yield fairly comprehensive results. In fact, you can identify around 85% of usability problems with just 5 people. That's definitely enough to be getting on with. And once you've fixed all those usability issues, you can go back out and test again.

Of course, the real benefit for us is that testing small groups of users makes the whole process faster, cheaper and more manageable. The University of Birmingham can testify to that.
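If you're wondering where a number like 85% comes from, it's based on a simple model: if each tester independently runs into any given problem with probability L, then n testers are expected to uncover 1 - (1 - L)^n of the problems. Here's a minimal Python sketch, assuming L = 0.31, the average discovery rate used in Nielsen's model. Your own site's rate could easily be higher or lower, so treat it as an assumption rather than a constant.

```python
def share_of_problems_found(users: int, discovery_rate: float = 0.31) -> float:
    """Expected share of usability problems uncovered by `users` testers.

    Assumes each tester independently hits any given problem with
    probability `discovery_rate` (0.31 is the average from Nielsen's model;
    an assumption, not a property of your site).
    """
    # The chance that every tester misses a problem is (1 - rate) ** users.
    return 1 - (1 - discovery_rate) ** users


for n in (1, 3, 5, 10):
    print(f"{n:>2} users: {share_of_problems_found(n):.0%}")
```

Under these assumptions, five users come out at about 84% (the "85%" you usually see is that figure rounded), and doubling to ten adds far less than the first five did. That's the case for testing in small rounds and iterating rather than running one big session.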

What's my motivation?

Once you have your 5 users to test, the next step is to assign them personas depending on what you want to learn about your website. In Birmingham's case, they were trying to find out more about how easily users could find course information. They give their users personas based on things like nationality, course type (e.g. undergraduate) and subject area. This helps put the series of tasks they'll be asked to perform into context.

I'll be honest, I'm still not 100% sold on this. I think it could be problematic to ask people whose first language is English to act as if it isn't. The same goes for asking a user to pretend they have expertise in a niche area of research. They won't be familiar with the terminology, and finding the relevant content could be a lot more difficult for them than for an expert. That could affect the reliability of the results. However, I appreciate that you have to start somewhere, and if you're trying to test specific user needs and journeys, this is a way to set the scene.

(I'd be interested to know what other user testers out there think of this approach.)

Learn your lines

If you're carrying out testing, it's important to have a clear script and framework for the session so that the user fully understands what they have to do and why. It also keeps your testing consistent and helps you avoid accidentally leading users. At Birmingham, they use a script based on one created by web usability expert Steve Krug.

We went through this script during the workshop, where we carried out some live testing. Posing as prospective students, we were asked to find information on courses and research. We started each task from Google rather than from within the university website. This is a great reminder of how most of us use the internet. It's easy to get caught up in how visible content is on department landing pages when we should also be thinking about how visible we are on Google's search results pages.

Realise the truth

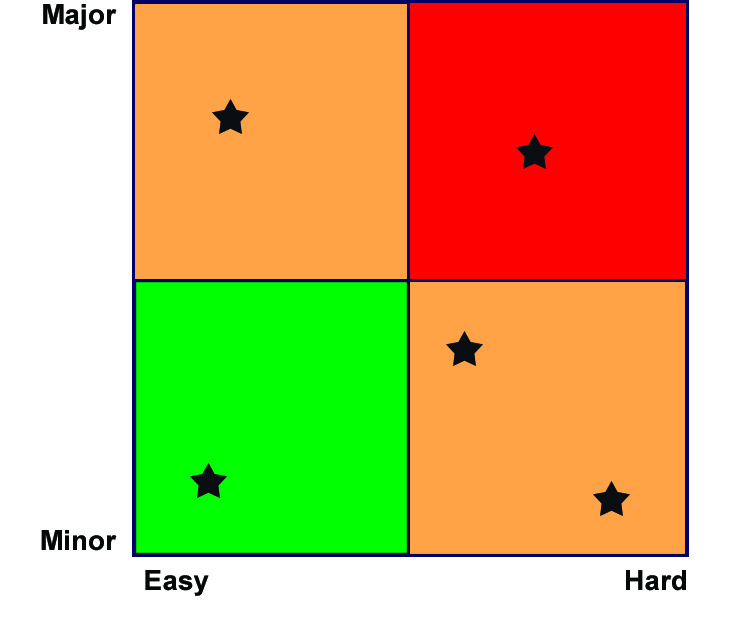

The next step is to collate all the issues raised during user testing and build a prioritisation matrix. This involves plotting each problem by how major or minor it is against how easy or hard it is to fix. And if you haven't seen a prioritisation matrix before, here's one I made earlier (prepare to be dazzled).

This gives you a good basis for building your plan of action. As a general rule, you'd start with the major problems that are easy to fix. The matrix also gives you something visual to present to stakeholders so they can understand how much work is involved in fixing the problems. Don't underestimate the impact of a pretty chart.
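If you'd rather keep the matrix alongside your testing notes than draw it by hand, here's a minimal Python sketch. It assumes you score each issue on hypothetical 1 to 5 scales for severity and effort, and treats a score of 3 or more as "major" or "hard". The issues are made up for illustration. Only the "major and easy first" rule comes from the workshop; the order of the other three quadrants is my own assumption.

```python
from dataclasses import dataclass

# Hypothetical scales: severity 1 = minor, 5 = major; effort 1 = easy, 5 = hard.
THRESHOLD = 3

# "Major, easy" first, as suggested at the workshop; the order of the
# remaining quadrants is an illustrative assumption.
QUADRANT_ORDER = ["major, easy", "major, hard", "minor, easy", "minor, hard"]


@dataclass
class Issue:
    description: str
    severity: int
    effort: int


def quadrant(issue: Issue) -> str:
    """Place an issue in one of the four quadrants of the matrix."""
    impact = "major" if issue.severity >= THRESHOLD else "minor"
    difficulty = "hard" if issue.effort >= THRESHOLD else "easy"
    return f"{impact}, {difficulty}"


def prioritise(issues: list[Issue]) -> list[Issue]:
    """Sort by quadrant, then by highest severity and lowest effort within it."""
    return sorted(
        issues,
        key=lambda i: (QUADRANT_ORDER.index(quadrant(i)), -i.severity, i.effort),
    )


# Made-up example issues, not Birmingham's results.
issues = [
    Issue("Course fees hidden in a PDF", severity=5, effort=2),
    Issue("Typo in footer", severity=1, effort=1),
    Issue("Course pages rank poorly in Google", severity=4, effort=5),
    Issue("Vague 'Apply' button label", severity=3, effort=1),
]

for issue in prioritise(issues):
    print(f"{quadrant(issue):<12} {issue.description}")
```

With these scores, the hidden fees and the vague button come out on top, the Google ranking problem follows as a major but harder job, and the footer typo comes last.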

It's a wrap

So you've carried out your user testing and you've prioritised your issues. You've analysed the results with stakeholders, put a plan of action into place and reviewed progress. "So what happens now?" I hear you sing (if you're still here). More testing? Definitely!

Just because you've got a set of results and made some improvements doesn't mean you're done: you should revisit your content and test how it performs again. You can and should take an iterative approach to user testing, and if you're using this small-scale testing model, that should be easier to do. The web, and how we interact with it, is always changing. We have to be able to respond to this to help our users find what they need and have a wonderful journey while they're doing it.

In a nutshell, the top tips I took away from this workshop were:

- have a good script for testing and stick to it

- review results with stakeholders

- set a clear plan of action based on priorities

- review progress

- be ready to test again and iterate

Until next time...