Background Context

In the Department of Mechanical Engineering, Dr Alan Hunter teaches a Year 2 undergraduate unit on computer programming and numerical modelling. Traditional paper exams can be unsatisfactory for this subject area as students are assessed in a less than authentic way. With the introduction of Inspera, the University’s new digital assessment platform, Alan was able to try out a new way of assessing his students and this case study describes that process.

In the past, the traditional two-hour paper-based exam required students to answer three out of four questions, where each question contained four or five related sub-questions. Within some of these questions students would be asked to write code. Coding has traditionally been a difficult topic for students taking this unit and writing code on paper seemed to cause additional problems as it is not the way code is created in practice.

The Digital Exam

A digital exam was created in Inspera using a combination of question types: multiple choice, multiple response, numeric entry and file upload. During the January 2021 exam period, staff were encouraged to use only this limited set of question types so that support teams could provide effective support within a short implementation period. For future exams, the expectation is that other question types will be available for staff to use.

Alan spent significant time rethinking the way the questions were presented in this exam. The aim was to take advantage of the automated marking while maintaining rigour, and to allow the code answers to be exported and tested.

The new exam therefore contained the following characteristics:

- 3 compulsory questions each allocated 30 marks

- Some parts to the questions were file uploads for code

- The other parts used automatically marked question types such as multiple choice and numeric entry

- 1 file upload question allocated 10 marks specifically to allow students to upload their written workings

Automatic-marking questions (In Inspera)

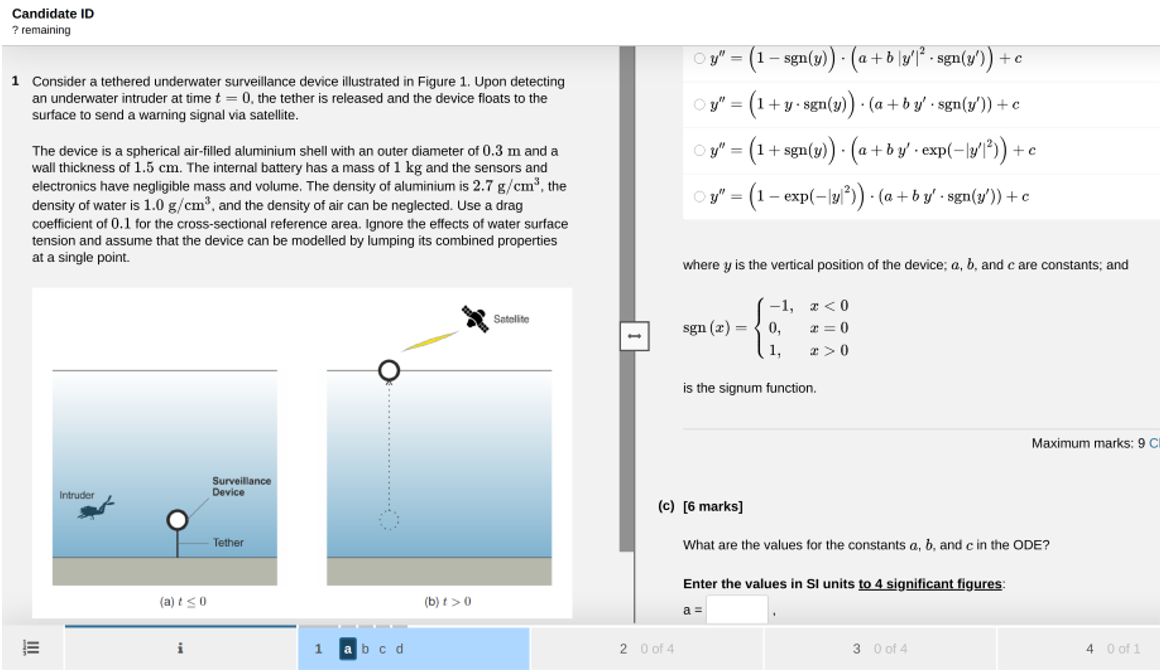

For numerical modelling questions, students were given a description of a problem and then asked to derive and solve equations. The choices offered were similar-looking equations, so students had to consider carefully which one was correct. These multiple-choice questions were followed by numeric entry questions in which students entered numerical values to show that they understood why the equation they chose worked. Alan specified that numerical values should be entered to 4 significant figures, and answers were accepted if they were correct to 3 significant figures to allow for possible rounding errors.
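The tolerance rule described above can be illustrated with a short sketch. This is not how Inspera implements numeric marking; it is one possible reading of "entered to 4 significant figures, accepted at 3", and the function names `round_sig` and `is_accepted` are hypothetical.

```python
from math import floor, log10


def round_sig(value: float, sig: int) -> float:
    """Round value to `sig` significant figures."""
    if value == 0:
        return 0.0
    # Position of the leading digit, e.g. 0 for 3.14 and -3 for 0.0012.
    exponent = floor(log10(abs(value)))
    return round(value, sig - 1 - exponent)


def is_accepted(submitted: float, expected: float, sig: int = 3) -> bool:
    """Assumed rule: accept if both values agree once rounded to `sig` figures."""
    return round_sig(submitted, sig) == round_sig(expected, sig)


# A student enters the answer to 4 significant figures.
entered = round_sig(3.14159, 4)
print(entered, is_accepted(entered, 3.14159))
```

A comparison like this is strict at rounding boundaries (two close values can round to different 3-figure results), so a relative-error check may be a more forgiving alternative.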

Manual-marking questions

The file upload responses containing the written working provided a way of checking that students had constructed the answers for themselves, and allocating 10 marks gave students an incentive to complete this part. These responses were then assessed on whether the students had worked in the manner expected, i.e., whether the workings were thorough and neat.

Automatic marking (bespoke)

For code questions, the uploaded files were exported from Inspera and tested against the relevant criteria using a bespoke automated process.

Please note that this approach is specific to Alan's exam and was made possible by his coding expertise; it is not something that all staff would or could do. Marks were allocated depending on whether:

- The code worked as expected

- The program fulfilled the scenarios set out in the criteria

- High-quality code was produced
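As an illustration only, an automated check of the first two criteria might look like the sketch below. The case study does not describe Alan's actual process or the programming language used in the unit, so everything here is an assumption: submissions are taken to be Python scripts that read from standard input, and `run_case` and `mark_submission` are hypothetical helpers. Code quality would still need a separate judgement.

```python
import subprocess
import sys
from pathlib import Path
from typing import Optional


def run_case(script: Path, stdin_text: str, timeout_s: float = 5.0) -> Optional[str]:
    """Run one submission on one input; return its output, or None on crash or timeout."""
    try:
        result = subprocess.run(
            [sys.executable, str(script)],
            input=stdin_text,
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return None
    if result.returncode != 0:
        return None
    return result.stdout.strip()


def mark_submission(script: Path, cases: list[tuple[str, str, int]]) -> int:
    """Sum the marks of every (input, expected output, marks) case that passes."""
    total = 0
    for stdin_text, expected, marks in cases:
        if run_case(script, stdin_text) == expected:
            total += marks
    return total
```

Running each submission in a separate process with a timeout means that one crashing or non-terminating program does not stop the rest of the batch from being marked.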

Alan's coding expertise also allowed him to cross-check student responses against one another to check for plagiarism. Again, this is unique to Alan's exam, but in future we expect Urkund to be available for general-purpose text-matching of student work. Using this process, Alan detected 7 cases (out of 330) where students had submitted similar or identical code.
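A pairwise comparison of this kind could be sketched as follows. The case study does not say which technique Alan used; this example swaps in Python's standard `difflib.SequenceMatcher`, and the `normalise` and `similar_pairs` helpers and the 0.9 threshold are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalise(source: str) -> str:
    """Drop blank lines and surrounding whitespace so layout changes do not hide a match."""
    return "\n".join(line.strip() for line in source.splitlines() if line.strip())


def similar_pairs(
    submissions: dict[str, str], threshold: float = 0.9
) -> list[tuple[str, str, float]]:
    """Return (id_a, id_b, ratio) for every pair at or above the similarity threshold."""
    flagged = []
    for (id_a, code_a), (id_b, code_b) in combinations(sorted(submissions.items()), 2):
        ratio = SequenceMatcher(None, normalise(code_a), normalise(code_b)).ratio()
        if ratio >= threshold:
            flagged.append((id_a, id_b, ratio))
    return flagged
```

Flagged pairs are only candidates for human review: short answers to a tightly specified question can look alike without any collusion.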

Results

This digital version of the exam has resulted in a successful assessment, where students were specifically able to undertake a coding exercise that resembles a more authentic task. Students were able to work on the code and test it before submitting. In the previous paper version students would often leave out the coding question completely. Some students did report enjoying the practical nature of the questions.

In terms of marking, Alan feels confident that his judgements are more objective and accurate than when he was marking paper exams. Firstly, the time spent on marking was significantly less, falling from approximately 80 hours to 20 hours. Automatic marking of multiple choice, multiple response and numeric entry questions reduces the time spent on marking, leaving more time to review the quality of student responses to the manually marked questions, i.e., the coding responses.

This is a rough estimate of the breakdown of tasks:

- One day learning to use Inspera interface

- One week to create the question set in Inspera, which was longer than normal because of having to rethink the format

- One day to create the bespoke automation code (specific to this exam)

- Half a day to check the automated marking

- Half a day to go through the uploaded written working from students

Secondly, the results showed an average mark of around 75% compared to the usual average of around 65%. However, this could be partly accounted for by the change to an open-book exam this time around and by the 24-hour examination window.

The automated results were exported from Inspera so they could be merged with the automated marking of the code that was completed offline. This meant the final marks could be uploaded to SAMIS via a .csv file provided by the Programme Administrator.
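The merge step described above could be sketched with Python's standard `csv` module. The actual export format from Inspera and the SAMIS upload template are not given in this case study, so the column names (`student_id`, `total_mark`) and the helper functions below are hypothetical.

```python
import csv
from typing import TextIO


def read_marks(handle: TextIO, id_column: str, mark_column: str) -> dict[str, float]:
    """Read one marks file into a mapping of student ID to mark."""
    return {row[id_column]: float(row[mark_column]) for row in csv.DictReader(handle)}


def merge_marks(auto: dict[str, float], code: dict[str, float]) -> dict[str, float]:
    """Add the two components; a student missing from one source scores 0 for it."""
    student_ids = sorted(auto.keys() | code.keys())
    return {sid: auto.get(sid, 0.0) + code.get(sid, 0.0) for sid in student_ids}


def write_marks(handle: TextIO, totals: dict[str, float]) -> None:
    """Write the combined marks with assumed column headers."""
    writer = csv.writer(handle)
    writer.writerow(["student_id", "total_mark"])
    for sid, total in totals.items():
        writer.writerow([sid, total])
```

Treating a missing component as 0 is a design choice made for this sketch; in practice it may be safer to list such students separately so that a failed upload is not mistaken for a zero mark.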

Digital Exams

As this assessment was successful, Alan would like to run it again in the same format but would also like to consider:

- Analysing the response statistics to see what students found difficult and to identify common errors

- Requesting a feature improvement for Inspera: the ability to change the scoring after the exam has completed, e.g., where a question was deemed too difficult, so that the results can be recalculated

Alan’s main tip for digital exams is to think about redesigning the exam so that the questions remain rigorous, whilst also taking advantage of the automated marking functionality. Paper exams do not always naturally translate to a digital format and may need to be adapted.

You can read more about designing effective online exams on the CLT Hub, or read about others' experiences of using Inspera. In subsequent exam periods, more functionality in Inspera, such as additional question types, will be explored.

Thank you to Dr Alan Hunter who offered his time to share this recent assessment experience.
