Building on the success of an initial trial, teaching staff are invited to pilot Crowdmark to see if it can help them grade and give feedback on students' scripts more efficiently and consistently.

What is Crowdmark?

Crowdmark is an online marking platform designed to make it easier to deliver efficient, consistent grading and feedback on students' work at scale.

Students submit their work through Moodle to a Crowdmark assessment point, which opens up Crowdmark’s marking tools to staff. In addition to standard marking tools, five features set it apart from other tools:

- Write once – use everywhere. Every comment gets added to a shared Comment Library so you can easily give consistent feedback at scale.

- Work as a team. Comment Library items are shared, which helps keep feedback consistent across marking teams.

- Marks can be attached to comments – this helps keep marking consistent, both for individual markers and across teams.

- Update once, update everywhere. From the Comment Library, you can edit a mark or comment and choose to apply that update everywhere it has been used.

- Students can upload from camera roll – this makes it easy for students to submit problem sheets to be marked question-by-question.

Crowdmark also supports many other features, including double-blind marking, group assessments, scanning support for in-class tests and problem sheets, and rich comments (e.g. images, links, videos and equations typed using LaTeX syntax).

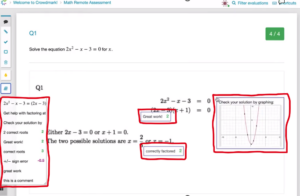

The Crowdmark interface showing annotations picked from the Comment Library (see Crowdmark demo video 8:27).

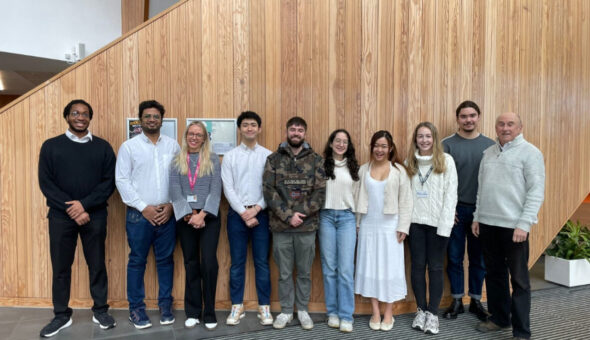

Staff experiences at the University

Last academic year, staff from Chemical Engineering, Mathematics and Physics ran a small pilot with a few hundred students (see Crowdmark Pilot Overview recording) to evaluate how useful the platform was for assessment and marking. Overall, there was a strong positive response from staff. The main benefits were in marking efficiency and in the quality of feedback that markers felt they were able to give.

Marking efficiency

- “If we are serious about providing individual feedback to large student cohorts then we need a tool like this.”

- “In moving assessments to Crowdmark, it took roughly a third of the time normally taken to grade assessments.”

- You get suggestions from your Comment Library as soon as you start typing.

- Importing comments from Excel/Moodle gives a good starting point (and speeds things up next year).

- Gentle learning curve when getting started.

- Analytics – “I can see frequent comments and easily identify common mistakes.”

Feedback quality

- “I could provide longer feedback as this is done only once per comment and re-used.”

- Batch editing comments. “Only after seeing a slightly unusual way of doing things several times did I understand what the students meant to do. It allowed me to navigate very easily back to the other students and adjust my comments.”

- Unit convenors could provide demonstrators with comments that hit the right tone and level of detail.

Watch Tina Duren share her experiences of using Crowdmark (4:27)

Student feedback

Students were given the opportunity to give feedback and were largely positive about submitting their work, viewing their marked work and reading their feedback. A few comments:

“Way easier than moodle, straight forward and convenient.”

“I liked how I got comments on my work specific and it was very easy to use Crowdmark.”

“We were told specifically which areas we did well or poorly. Feedback comments were useful and not general. Overall I was very happy with using Crowdmark!”

“Feedback on how to improve and exactly where marks were deducted not just comments on general shortcomings.”

Try Crowdmark for yourself

With financial support from the Teaching Development Fund and the Faculty of Engineering and Design, you (and your students) can test out Crowdmark for yourself. If you are interested in trying it for coursework, problem sheets or class tests, please fill in this contact form. For any questions, ask James Foadi (Department of Mathematics) or Josh Lim (Technology Enhanced Learning team). We especially welcome trials during semester 1 and, whilst the pilot to date has focused on STEM subjects, we welcome participants from across the University.

The initial pilot during the 2022/2023 academic year was supported by the Teaching Development Fund.
